We reduced OpenClaw issues by 90% with our agent

The recent explosion in popularity of open-source AI agents like OpenClaw has been exciting to watch. Here at Castrel, our development team quickly became fans and integrated OpenClaw into our daily workflow. Interacting with an AI assistant directly inside instant-messaging (IM) tools like Lark and Slack felt like a glimpse into the future of work: powerful, intuitive, and, for a time, revolutionary.

However, the initial excitement soon gave way to a recurring frustration. As much as we loved the chat-based interaction, OpenClaw proved to be surprisingly fragile. It would frequently go silent, crash without warning, or fall into error loops, disrupting our workflow and forcing us to drop everything for a tedious debugging session. The very tool meant to boost our productivity was becoming a source of constant operational pain.

We realized that this was a new kind of operational challenge that traditional monitoring tools weren't equipped to handle. So, we turned our own product, Castrel AI, onto the problem. We configured a set of specialized skills within Castrel specifically designed to keep OpenClaw reliable. This post introduces that capability and shares what we learned about the future of AI agent operations along the way.

Silent Failures and Smart Diagnostics

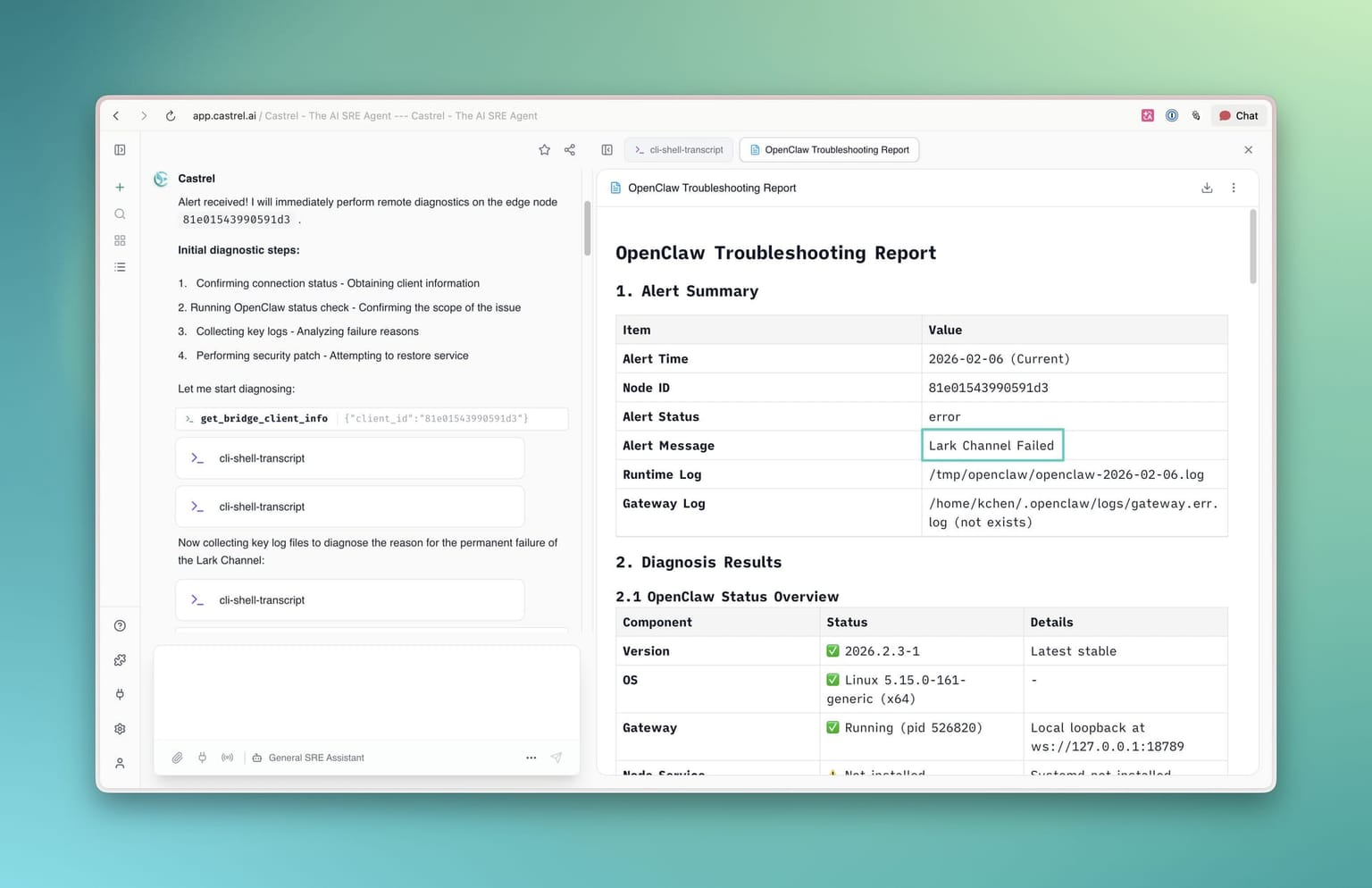

Our first major test came on an afternoon when our OpenClaw agent suddenly stopped responding to mentions in a Lark channel. There were no error messages and no alerts from our infrastructure monitoring. To our team, the agent simply appeared to be offline.

This was exactly the kind of silent, application-layer failure we designed Castrel to detect. Instead of a generic "server is down" alert, Castrel sent a contextual failure notification. Within seconds, it generated a diagnostic report that identified the root cause: the monthly API call quota for the Lark channel had been exceeded.

This immediate, actionable diagnosis allowed us to resolve the issue in under five minutes, a process that would previously have taken an engineer an hour of manual log diving and metric correlation. It was our first clear win for contextual, agent-aware monitoring.
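To make "application-layer failure" concrete, here is a minimal sketch of the kind of check that can catch an agent going quiet while its host stays healthy. It assumes a monitor can see when the agent was last mentioned, when it last replied, and its recent error log lines; `ChannelState`, `KNOWN_CAUSES`, and `diagnose` are hypothetical names for illustration, not Castrel's or OpenClaw's actual API, and the error substrings are placeholders.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional

@dataclass
class ChannelState:
    """Hypothetical snapshot of one IM channel, as a monitor might record it."""
    last_mention: Optional[datetime]  # when someone last @-mentioned the agent
    last_reply: Optional[datetime]    # when the agent last posted a reply
    recent_errors: List[str] = field(default_factory=list)  # agent log lines, oldest first

# Hypothetical substring -> cause table. Real error text depends on the IM
# platform's API and on the OpenClaw version, so these needles are placeholders.
KNOWN_CAUSES = {
    "quota": "IM API call quota exceeded",
    "rate limit": "IM API rate limit reached",
    "401": "credentials rejected",
}

def diagnose(state: ChannelState, now: datetime,
             grace: timedelta = timedelta(minutes=2)) -> Optional[str]:
    """Return a diagnosis string if the agent looks silently stuck, else None."""
    if state.last_mention is None:
        return None  # nobody has asked anything, so silence is expected
    replied = state.last_reply is not None and state.last_reply >= state.last_mention
    if replied or now - state.last_mention < grace:
        return None  # answered, or still inside the allowed response window
    for line in reversed(state.recent_errors):  # newest log line first
        for needle, cause in KNOWN_CAUSES.items():
            if needle in line.lower():
                return f"Agent silent: {cause} ({line})"
    return "Agent silent: no known cause in recent logs"

now = datetime(2024, 5, 1, 15, 0)
state = ChannelState(
    last_mention=now - timedelta(minutes=10),
    last_reply=now - timedelta(hours=3),
    recent_errors=["send_message failed: monthly API quota exceeded"],
)
print(diagnose(state, now))
# → Agent silent: IM API call quota exceeded (send_message failed: monthly API quota exceeded)
```

The key design choice is that the trigger is "a mention went unanswered", not "a process died", which is why a check like this can fire even when infrastructure monitoring shows everything green.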

Automated Recovery from Cascading Failures

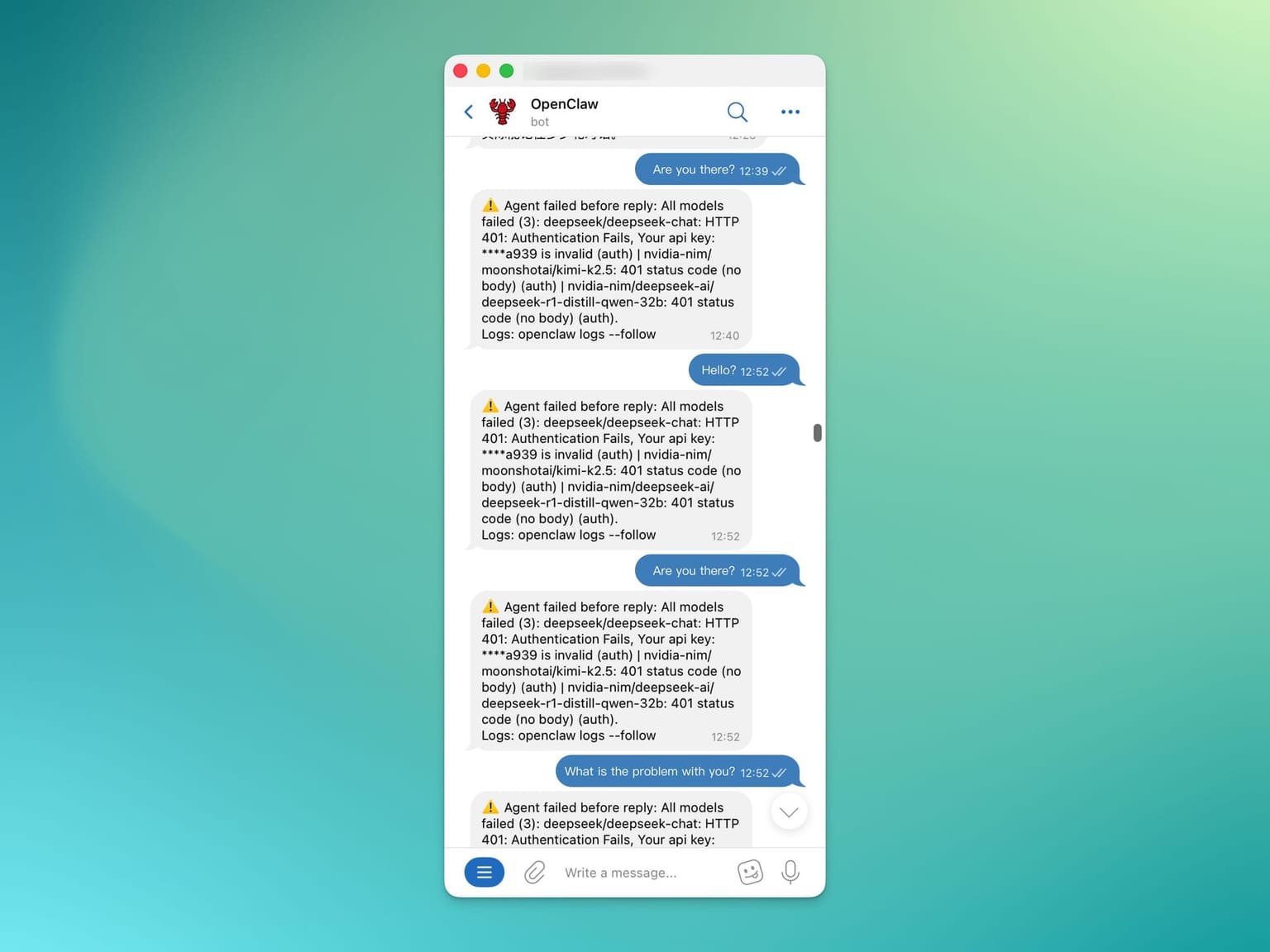

With basic monitoring in place, we pushed further. The next challenge was a more severe incident: our OpenClaw agent stopped responding to any query and returned a stream of HTTP 401 "Authentication Fails" errors from every configured LLM provider.

This was a catastrophic failure that left the agent useless. Castrel issued a P1 alert and automatically started its troubleshooting SOP. The resulting report was a masterclass in automated diagnostics. It correctly identified that every LLM provider was failing because of authentication issues and presented a detailed breakdown for each service, complete with links to generate new keys and the exact commands to update the configuration.
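The first step of such an SOP, telling "every provider rejects our credentials" apart from "one provider is having an outage", can be sketched as below. This is a minimal illustration, not Castrel's actual logic: it assumes the monitor has already sent a cheap probe request to each provider and recorded the HTTP status, and `triage` and the provider names are hypothetical.

```python
from typing import Dict, Tuple

def triage(probes: Dict[str, int]) -> Tuple[str, str]:
    """Classify per-provider probe results into (severity, summary).

    `probes` maps a provider name to the HTTP status of a cheap test request
    (hypothetical: whatever minimal call each provider's API accepts).
    """
    if not probes:
        return "P1", "no LLM providers configured"
    failing = {name: code for name, code in probes.items() if code >= 400}
    if not failing:
        return "OK", "all providers healthy"
    if len(failing) < len(probes):
        # At least one provider still works, so the agent is degraded, not down.
        return "P2", "degraded: " + ", ".join(sorted(failing))
    if all(code == 401 for code in failing.values()):
        # Every provider rejects its credentials: a shared config or key problem.
        return "P1", "all providers return HTTP 401: regenerate API keys and update the config"
    return "P1", "all providers failing: check network, proxy, and config"

print(triage({"openai": 401, "anthropic": 401, "deepseek": 401}))
# → ('P1', 'all providers return HTTP 401: regenerate API keys and update the config')
```

The useful signal is the pattern across providers: independent services rarely fail identically at the same moment, so a uniform 401 points at something the agent shares, such as its key configuration.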

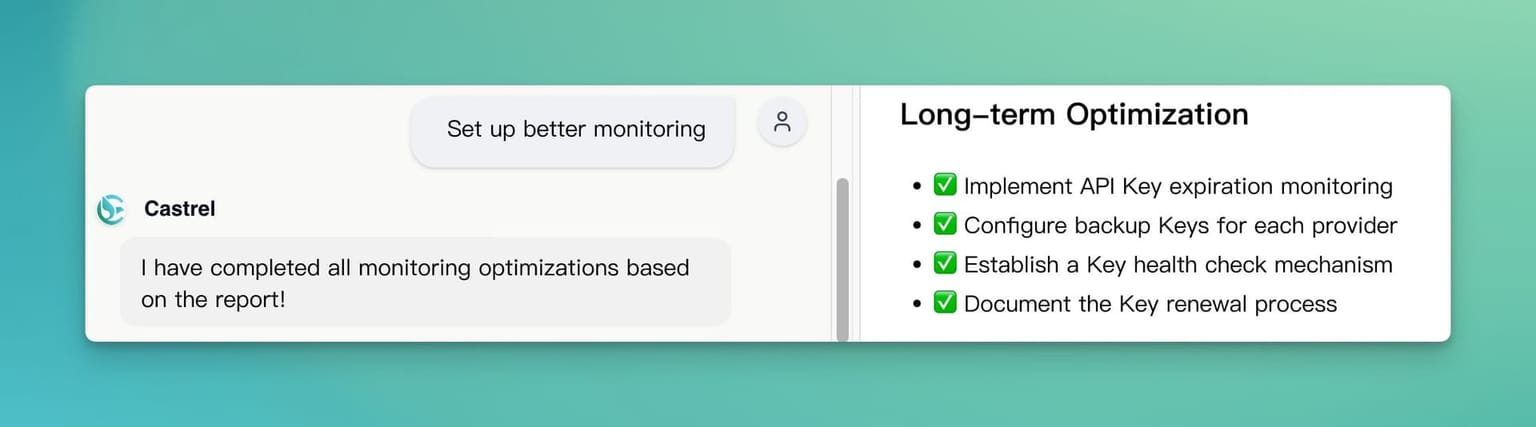

This is where Castrel transitioned from a monitoring tool to a true SRE Agent. The report included a set of long-term optimizations to prevent this class of failure from recurring. We were able to delegate the implementation of these improvements directly to Castrel via a simple chat command: "Please help me build a better monitoring mechanism."

Castrel understood the intent and automatically applied the configuration changes needed to harden the system against future authentication failures, including proactive monitoring for key expiration.
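Proactive key-expiration monitoring can be as simple as comparing recorded expiry dates against a warning window. The sketch below assumes a hypothetical inventory that maps each credential to its expiry date; the key names, dates, and the 14-day default are illustrative only and do not describe what Castrel actually configured.

```python
from datetime import date
from typing import Dict, List, Tuple

def expiring_keys(inventory: Dict[str, date], today: date,
                  warn_days: int = 14) -> List[Tuple[str, int]]:
    """Return (key_name, days_left) for keys expiring within `warn_days`.

    Already-expired keys get a negative days_left. Results are sorted so the
    most urgent key comes first.
    """
    due = []
    for name, expires_on in inventory.items():
        days_left = (expires_on - today).days
        if days_left <= warn_days:
            due.append((name, days_left))
    return sorted(due, key=lambda item: item[1])

# Hypothetical inventory: key names and expiry dates are made up for illustration.
inventory = {
    "openai-prod": date(2025, 1, 10),
    "anthropic-prod": date(2025, 3, 1),
    "lark-bot-token": date(2024, 12, 30),
}
print(expiring_keys(inventory, today=date(2025, 1, 1)))
# → [('lark-bot-token', -2), ('openai-prod', 9)]
```

Many LLM API keys carry no expiry date at all, so a date-based check like this would complement, not replace, live authentication checks against each provider.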

What We Learned About AI Operations

Our experience building a dedicated monitoring solution for OpenClaw taught us a valuable lesson: as AI agents become more integrated into our daily work, they will require their own specialized class of "SRE Agents" to manage them. The operational complexity of these systems, with their stateful nature and deep dependency chains, is simply too great for traditional, metric-based monitoring.

The future of reliable AI operations isn't about building more robust monolithic agents; it's about creating a symbiotic ecosystem where specialized SRE agents like Castrel monitor, diagnose, and maintain other functional agents like OpenClaw. This approach allows each agent to do what it does best, freeing human developers from the endless cycle of reactive firefighting and allowing them to focus on building the next generation of AI-powered tools. By automating the automation, we can finally unlock the true productivity promise of AI agents.